How It Works

By default, scraping requests are processed asynchronously. When a request is submitted, the system begins processing the job in the background and immediately returns a snapshot ID. Once the scraping task is complete, the results can be retrieved at your convenience by using the snapshot ID to download the data via the API. Alternatively, you can configure the request to automatically deliver the results to an external storage destination, such as an S3 bucket or Azure Blob Storage. This approach is well-suited for handling larger jobs or integrating with automated data pipelines.

Body

The inputs to be used by the scraper. Can be provided either as JSON or as a CSV file:

A JSON array of inputs

Example: [{"url":"https://www.airbnb.com/rooms/50122531"}]

A CSV file, in a field called data

Example (curl): data=@path/to/your/file.csv
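As a rough illustration of the two body formats, the sketch below builds the same inputs once as a JSON array and once as CSV text, using only the Python standard library. The helper names `inputs_to_json` and `inputs_to_csv` are made up for this example; only the JSON array shape and the `data` field name for CSV uploads come from the description above.

```python
import csv
import io
import json


def inputs_to_json(inputs):
    """Serialize a list of input dicts as a compact JSON array."""
    return json.dumps(inputs, separators=(",", ":"))


def inputs_to_csv(inputs):
    """Render the same inputs as CSV text, one row per input."""
    # Collect column names in first-seen order so the header is stable.
    fieldnames = []
    for row in inputs:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(inputs)
    return buffer.getvalue()


inputs = [{"url": "https://www.airbnb.com/rooms/50122531"}]
json_body = inputs_to_json(inputs)  # sent directly as the request body
csv_body = inputs_to_csv(inputs)    # saved to a file and uploaded in the "data" field
```

The CSV text would typically be written to a file and then attached in the `data` field, as in the curl example above.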

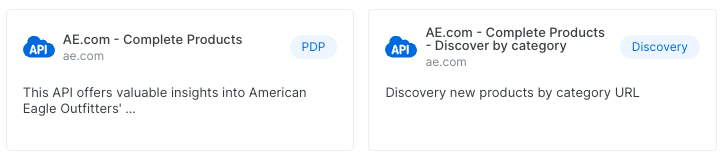
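The asynchronous flow described at the start of this section (submit, receive a snapshot ID, retrieve the results later) can be sketched as a simple polling loop. This is a minimal sketch under stated assumptions: `get_status` and `download` are caller-supplied functions wrapping whatever API calls you use, and the status strings `"ready"` and `"failed"` are hypothetical, not taken from the API itself.

```python
import time


def wait_for_results(snapshot_id, get_status, download,
                     poll_interval=5.0, max_polls=120, sleep=time.sleep):
    """Poll until the snapshot is ready, then download its data.

    get_status(snapshot_id) -> str and download(snapshot_id) -> object are
    supplied by the caller. The "ready" and "failed" status values are
    assumed for illustration only.
    """
    for _ in range(max_polls):
        status = get_status(snapshot_id)
        if status == "ready":
            return download(snapshot_id)
        if status == "failed":
            raise RuntimeError(f"snapshot {snapshot_id} failed")
        sleep(poll_interval)
    raise TimeoutError(f"snapshot {snapshot_id} not ready after {max_polls} polls")


# Demo with in-memory stand-ins for the API (no network access).
_statuses = iter(["running", "running", "ready"])
result = wait_for_results(
    "snap_123",  # hypothetical snapshot ID
    get_status=lambda sid: next(_statuses),
    download=lambda sid: [{"snapshot": sid}],
    sleep=lambda seconds: None,  # skip real waiting in the demo
)
```

Passing the status and download functions in as arguments keeps the loop independent of any particular HTTP client. When results are delivered to external storage instead, no polling loop of this kind is needed.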

Web Scraper Types

Each scraper can require different inputs. There are 2 main types of scrapers:

1. PDP

These scrapers require URLs as inputs. A PDP scraper extracts detailed product information like specifications, pricing, and features from web pages.

2. Discovery

Discovery scrapers allow you to explore and find new entities/products through search, categories, keywords and more.

Request examples

PDP with URL input

Input format for PDP is always a URL, pointing to the page to be scraped.

Sample Request
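One possible shape for such a request, sketched with Python's standard library. The endpoint `https://api.example.com/scrape`, the token placeholder, and the Bearer authorization header are all assumptions made for illustration; substitute the values from your own account. Only the body, a JSON array of `url` inputs, follows the format described earlier.

```python
import json
import urllib.request

API_URL = "https://api.example.com/scrape"  # hypothetical endpoint, not the real one
API_TOKEN = "YOUR_API_TOKEN"                # placeholder credential


def build_pdp_request(urls):
    """Build a POST request whose body is a JSON array of {"url": ...} inputs."""
    body = json.dumps([{"url": u} for u in urls]).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {API_TOKEN}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_pdp_request(["https://www.airbnb.com/rooms/50122531"])

# Sending is left commented out because the endpoint above is a placeholder:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```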

Discovery input based on the discovery method

Sample Request
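Because discovery inputs depend on the scraper and the discovery method, the following is only a sketch of how such a body might be assembled. The field name `keyword` and the sample values are hypothetical; check the specific scraper for the input fields it actually accepts.

```python
import json


def build_discovery_inputs(method, values):
    """Wrap each value in an input object keyed by the discovery method.

    `method` stands in for a scraper-specific input field name,
    for example a hypothetical "keyword" field.
    """
    if not values:
        raise ValueError("at least one discovery input is required")
    return [{method: value} for value in values]


# Hypothetical keyword-based discovery: one input object per search term.
discovery_inputs = build_discovery_inputs("keyword", ["beach house", "city loft"])
discovery_body = json.dumps(discovery_inputs)
```

The resulting JSON array is sent in the request body in the same way as the PDP inputs; only the fields inside each input object differ.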

Discovery inputs can vary according to the specific scraper. Inputs can be: